How to Integrate Experiments Into an MMM Platform: A Practical Guide

If you have been running marketing experiments for years (geo tests, conversion lift studies, or other tests), it is reasonable to expect the learnings from those experiments to carry into your marketing measurement.

In fact, we hear this question constantly in tender processes and technical Q&A: “We’ve done lots of experiments. How can we integrate those into your platform?”

This post walks through the exact answer, without hand-waving, and explains how existing experiments can be used inside Sellforte to improve Marketing Mix Model accuracy. This is written for marketing and analytics teams who already invest in experimentation and want to make that work carry forward instead of starting from scratch.

Start by Collecting Experiments in the Experiment Library

Before experiments can be leveraged, they need to live in a single place. In Sellforte Experiments, that place is the Experiment Library, which is simply where all of your experiments are stored. Below is a screenshot of Sellforte's Experiment Library:

You can store all major marketing experiment types in the library, including GeoLift tests and Conversion Lift tests run within advertising platforms.

There are three practical ways to add historical experiments, depending on how they were originally run.

Method 1 for Adding Tests: Analyze Past GeoLift Tests in Sellforte

If you have run geo-based experiments before, you can bring them into Sellforte by re-analyzing them directly in the platform.

This is done by clicking "Create new experiment", as shown in the screenshot below.

...and then adding the experiment specifications, such as timing, type, and test/control groups, as shown in the screenshot below.

After the test is analyzed, you get a full analysis of the test, including an iROAS (incremental return on ad spend) estimate, confidence statistics, and an AI summary.

Here's an example of a test analysis:

This approach is especially useful if you are unsure whether your previous experiments were properly analyzed, or just to get a second opinion on your previous tests.
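To make the iROAS estimate concrete, here is a minimal sketch of one simple way to compute it for a geo test: scale the control group's post-period revenue by the pre-period test/control ratio to get a counterfactual, then divide the incremental revenue by the incremental spend. This simplified ratio estimator is an assumption for illustration, not Sellforte's analysis method, and it omits the confidence statistics a full analysis reports. The function name `geo_iroas` and all numbers are hypothetical.

```python
def geo_iroas(test_pre, control_pre, test_post, control_post, incremental_spend):
    """Estimate iROAS with a simple scaled-control counterfactual.

    Hypothetical assumption: the test/control revenue ratio seen in the
    pre-period would have held in the post-period without the campaign.
    """
    if control_pre <= 0 or incremental_spend <= 0:
        raise ValueError("control_pre and incremental_spend must be positive")
    ratio = test_pre / control_pre              # pre-period relationship between groups
    counterfactual = ratio * control_post       # expected test revenue without the media
    incremental_revenue = test_post - counterfactual
    return incremental_revenue / incremental_spend


# Hypothetical revenue and spend figures, for illustration only.
iroas = geo_iroas(test_pre=100_000, control_pre=200_000,
                  test_post=130_000, control_post=220_000,
                  incremental_spend=10_000)
print(f"iROAS: {iroas:.2f}")  # prints "iROAS: 2.00"
```

Dedicated geo-testing tools typically fit richer counterfactual models (for example, synthetic control) on daily data and report uncertainty alongside the point estimate, which is one reason re-analyzing old tests can change their conclusions.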

Method 2 for Adding Tests: Integrate Lift Tests From Ad Platforms

Many brands nowadays use lift studies offered by advertising platforms.

It might be a surprise to some, but those tests can be integrated into the Experiment Library as well. This allows platform-native lift tests to live alongside geo experiments and other methodologies, instead of being locked inside individual tools. Below is an example of a Meta Conversion Lift study imported into the Experiment Library.

The technical guidance for adding ad platform tests differs by platform, so check the latest guidance from Sellforte directly.

Method 3 for Adding Tests: Upload Past Experiments Using a Template

For experiments that do not fall neatly into the first two categories, Sellforte provides a structured template for uploads.

This works well for:

- Custom experiments run with agencies

- One-off tests analyzed internally

- Legacy experiments that still have solid documentation

As long as the experiment setup and results can be clearly described, they can be added to the library. Ask Sellforte for the latest template before uploading.

Using Experiments to Calibrate Marketing Mix Models

Once experiments are in the Experiment Library, they are not just stored for reference. They can be used as one data source for calibrating the Marketing Mix Model.

The main benefit of this is straightforward: increased model accuracy.

Experiments can provide ground-truth signals that help anchor the MMM to real, observed outcomes rather than relying only on historical correlations.

In practice, experiments are taken into account by forming an informative prior distribution (or prior) within the Bayesian framework, as shown in this chart. Compared to non-informative priors, informative priors give the Marketing Mix Model more guidance on where the actual ROI of a channel is likely to lie.
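The effect of an informative prior can be sketched with the simplest Bayesian update: a Normal prior combined with a Normal likelihood, where each source is weighted by its precision (1 / variance). The numbers are hypothetical: the MMM data alone are assumed to suggest a noisy channel ROI of 1.0 ± 1.0, and an experiment is assumed to report an iROAS of 2.0 with a standard error of 0.4. A real MMM fits all channels jointly, usually with sampling and often with priors restricted to positive values, so this conjugate example only illustrates the mechanism.

```python
import math


def normal_posterior(prior_mean, prior_sd, data_mean, data_sd):
    """Conjugate Normal-Normal update: each source is weighted by its precision."""
    prior_prec = 1.0 / prior_sd ** 2
    data_prec = 1.0 / data_sd ** 2
    post_prec = prior_prec + data_prec
    post_mean = (prior_prec * prior_mean + data_prec * data_mean) / post_prec
    return post_mean, math.sqrt(1.0 / post_prec)


# Hypothetical signal from the MMM data alone for one channel (noisy, e.g. because
# spend in this channel moves together with other channels).
data_mean, data_sd = 1.0, 1.0

# Non-informative prior: very wide, so the data dominate.
vague = normal_posterior(prior_mean=0.0, prior_sd=100.0,
                         data_mean=data_mean, data_sd=data_sd)

# Informative prior from a hypothetical experiment: iROAS 2.0, standard error 0.4.
informed = normal_posterior(prior_mean=2.0, prior_sd=0.4,
                            data_mean=data_mean, data_sd=data_sd)

print(f"non-informative prior -> ROI {vague[0]:.2f} +/- {vague[1]:.2f}")
print(f"experiment prior      -> ROI {informed[0]:.2f} +/- {informed[1]:.2f}")
```

With the wide prior the estimate stays at roughly 1.0 ± 1.0, while the experiment-based prior pulls it to about 1.86 and narrows the uncertainty to about ± 0.37. A precise experiment therefore carries more weight than a noisy one, which is also why experiment quality matters.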

A Critical Point: Only Use High-Quality Experiments

Not all experiments should be used for calibration.

Quality can vary significantly based on:

- Test design

- Sample size and duration

- Geo or audience selection

- How the results were analyzed

Including low-quality experiments can do more harm than good. When calibrating MMM with experiments, it is essential to be selective and intentional.

A common misconception is that more experiments automatically mean better models. They do not; better experiments do.
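Being selective can be made explicit as a screening step before calibration. The sketch below checks each experiment against a few of the quality factors listed in this section (duration, precision of the estimate, and comparability of the test and control groups). The `Experiment` record, its field names, the thresholds, and the example experiments are all hypothetical; they do not reflect Sellforte's upload template or its actual quality rules.

```python
from dataclasses import dataclass


@dataclass
class Experiment:
    # Hypothetical record; field names are illustrative, not Sellforte's template.
    name: str
    channel: str
    duration_days: int
    iroas: float
    ci_low: float             # lower bound of the reported confidence interval
    ci_high: float            # upper bound of the reported confidence interval
    groups_comparable: bool   # e.g. test and control tracked each other before launch


def passes_quality_checks(exp, min_days=21, max_ci_width=2.0):
    """Return (ok, reasons). The thresholds are hypothetical defaults."""
    reasons = []
    if exp.duration_days < min_days:
        reasons.append(f"too short ({exp.duration_days} < {min_days} days)")
    ci_width = exp.ci_high - exp.ci_low
    if ci_width > max_ci_width:
        reasons.append(f"too imprecise (CI width {ci_width:.2f} > {max_ci_width})")
    if not exp.groups_comparable:
        reasons.append("test and control groups not comparable")
    return (not reasons, reasons)


# Hypothetical experiment library.
library = [
    Experiment("Meta CL Q1", "meta", 28, 2.1, 1.6, 2.6, True),
    Experiment("TV geo test", "tv", 14, 3.5, 0.2, 6.8, True),
    Experiment("Search geo test", "search", 35, 1.2, 0.9, 1.5, False),
]

calibration_set = []
for exp in library:
    ok, reasons = passes_quality_checks(exp)
    if ok:
        calibration_set.append(exp)
    else:
        print(f"Excluded {exp.name}: {'; '.join(reasons)}")

print("Used for calibration:", [e.name for e in calibration_set])
```

A hard filter is the simplest option; an alternative is to keep borderline experiments but widen their priors, so that weaker evidence carries less weight.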

See Sellforte Experiments in Action

If you want to see how this works in practice, Sellforte offers a public demo that shows the Experiment Library and calibration workflow. No sign-up or registration is required.

Conclusions

If you have already run experiments, that work does not need to be lost or repeated. By collecting experiments into a structured library and using only high-quality tests for calibration, you can directly improve the accuracy and credibility of your Marketing Mix Models.

That is the core idea behind how Sellforte integrates experiments into the platform.

Authors

Lauri Potka is the Chief Operating Officer at Sellforte, with over 15 years of experience in Marketing Mix Modeling, marketing measurement, and media spend optimization. Before joining Sellforte, he worked as a management consultant at the Boston Consulting Group, advising some of the world’s largest advertisers on data-driven marketing optimization. Follow Lauri on LinkedIn, where he is one of the leading voices in MMM and marketing measurement.

You May Also Like

These Related Stories

What is Causal Attribution in Marketing?

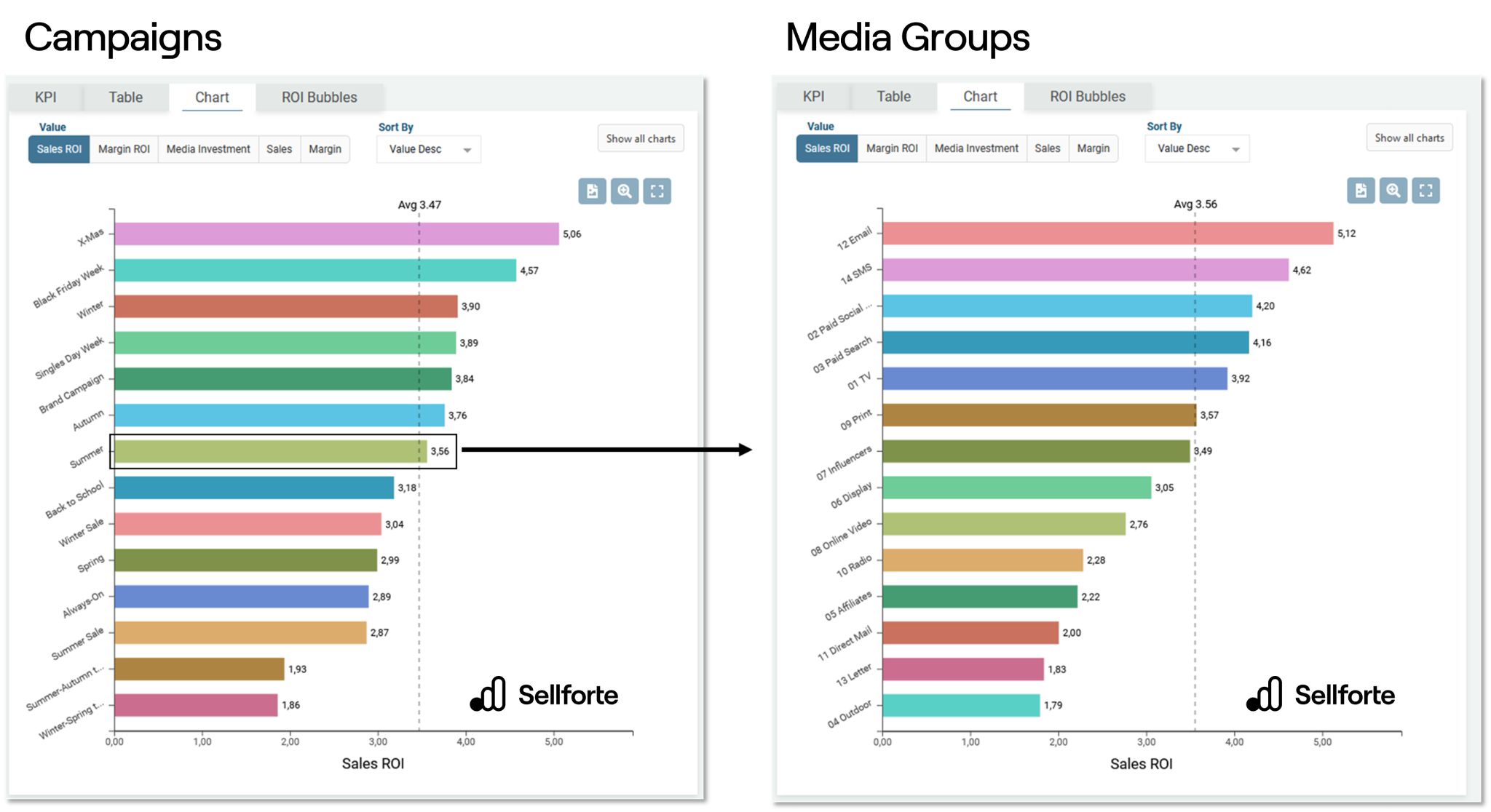

How Can You Unlock the Full Potential of MMM with Campaign Dimensions?